This is the second part of the "Whose entropy is it anyway?" series. Part 1: "Boltzmann, Shannon, and Gibbs" is here.

Yes, let's talk about that second law in light of the fact we just established, namely that Boltzmann and Shannon entropy are fundamentally describing the same thing: they are measures of uncertainty applied to different realms of inquiry, making us thankful that Johnny vN was smart enough to see this right away.

The second law is usually written like this:

"When an isolated system approaches equilibrium from a non-equilibrium state, its entropy almost always increases"

I want to point out here that this is a very curious law, because there is, in fact, no proof for it. Really, there isn't. Not every thermodynamics textbook is honest enough to point this out, but I was taught this early on, because I learned thermodynamics from the East German edition of Landau and Lifshitz's tome "Statistische Physik", which is quite forthcoming about this (quoted here from the English translation):

"At the present time, it is not certain whether the law of increase of entropy thus formulated can be derived from classical mechanics"

From that, L&L go on to speculate that the arrow of time may be a consequence of quantum mechanics.

I personally think that quantum mechanics has nothing to do with it (but see further below). The reason the law cannot be derived is that it does not exist.

I know, I know. Deafening silence. Then:

"What do you mean? Obviously the law exists!"

What I mean, to be more precise, is that strictly speaking Boltzmann's entropy cannot describe what goes on when a system not at equilibrium approaches said equilibrium, because Boltzmann's entropy is an equilibrium concept. It describes the value that is approached when a system equilibrates. It cannot describe its value as it approaches that constant. Yes, Boltzmann's entropy is a constant: it counts how many microstates can be taken on by a system at fixed energy.

When a system is not at equilibrium, fewer microstates are actually occupied by the system, but the number it could potentially take on is constant. Take, for example, the standard "perfume bottle" experiment that is so often used to illustrate the second law:

An open "perfume bottle" (left) about to release its molecules into the available space (right)

The entropy of the gas inside the bottle is usually described as being small, while the entropy of the gas on the right (because it occupies a large space) is believed to be large. But Boltzmann's formula is actually not applicable to the situation on the left, because it assumes (on account of the equilibrium condition) that the probability distributions in phase space of all particles involved are independent. But they are clearly not: if I know the location of one of the particles in the bottle, I can make very good predictions about the other particles, because they occupy such a confined space. (This is much less true for the particles in the larger space at right, obviously.)

What should we do to correct this?

We need to come up with a formula for entropy that is not explicitly true only at equilibrium, and that allows us to quantify correlations between particles. Thermodynamics cannot do this, because equilibrium thermodynamics is precisely that theory that deals with systems whose correlations have decayed long ago, or as Feynman put it, systems "where all the fast things have happened but the slow things have not".

Shannon's formula, it turns out, does precisely what we are looking for: quantify correlations between all particles involved. Thus, Shannon's entropy describes, in a sense, nonequilibrium thermodynamics. Let me show you how.

Let's go back to Shannon's formula applied to a single molecule, described by a random variable $A_1$, and call this entropy $H(A_1)$.

I want to point out right away something that may shock and disorient you, unless you followed the discussion in the post "What is Information? (Part 3: Everything is conditional)" that I mentioned earlier. This entropy $H(A_1)$ is actually conditional. This will become important later, so just store this away for the moment.

OK. Now let's look at a two-atom gas. Our second atom is described by random variable $A_2$, and you can see that we are assuming here that the atoms are distinguishable. I do this only for convenience; everything can be done just as well for indistinguishable particles.

If there are no correlations between the two atoms, then the entropy of the joint system is $H(A_1A_2)=H(A_1)+H(A_2)$, that is, entropy is extensive. Thermodynamical entropy is extensive because it describes things at equilibrium. Shannon entropy, on the other hand, is not necessarily extensive. It can describe things that are not at equilibrium, because then

$$H(A_1A_2)=H(A_1)+H(A_2)-H(A_1:A_2),$$

where $H(A_1:A_2)$ is the correlation entropy, or shared entropy, or information, between $A_1$ and $A_2$. It is what allows you to predict something about $A_2$ when you know $A_1$, which is precisely what we already knew we could do in the picture of the molecules crammed into the perfume bottle on the left. This is stunning news for people who only know thermodynamics.

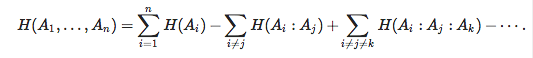
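To make the two-atom decomposition concrete, here is a minimal numerical sketch. The joint distribution is invented purely for illustration (each atom sits in the left or right half of the bottle, and the two tend to be found on the same side); nothing about it comes from a real gas. The shared entropy is computed directly from the probabilities and then checked against $H(A_1A_2)=H(A_1)+H(A_2)-H(A_1:A_2)$.

```python
import math
from collections import defaultdict

def entropy(dist):
    """Shannon entropy (in bits) of a dict {outcome: probability}."""
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)

def marginal(joint, index):
    """Distribution of one coordinate of a joint distribution over tuples."""
    out = defaultdict(float)
    for outcome, p in joint.items():
        out[outcome[index]] += p
    return dict(out)

def shared_entropy(joint):
    """H(A1:A2), computed directly as sum p(a,b) log2[p(a,b) / (p(a) p(b))]."""
    m1, m2 = marginal(joint, 0), marginal(joint, 1)
    return sum(p * math.log2(p / (m1[a] * m2[b]))
               for (a, b), p in joint.items() if p > 0)

# Hypothetical correlated pair: 0 = left half, 1 = right half of the bottle.
correlated = {(0, 0): 0.4, (0, 1): 0.1, (1, 0): 0.1, (1, 1): 0.4}
# Hypothetical uncorrelated pair, for comparison.
independent = {(a, b): 0.25 for a in (0, 1) for b in (0, 1)}

h1 = entropy(marginal(correlated, 0))
h2 = entropy(marginal(correlated, 1))
h12 = entropy(correlated)
shared = shared_entropy(correlated)

print(f"H(A1) + H(A2)            = {h1 + h2:.4f} bits")
print(f"H(A1:A2)                 = {shared:.4f} bits")
print(f"H(A1A2)                  = {h12:.4f} bits")
print(f"H(A1) + H(A2) - H(A1:A2) = {h1 + h2 - shared:.4f} bits")
print(f"H(A1:A2), independent    = {shared_entropy(independent):.4f} bits")
```

For the independent pair the shared entropy vanishes and the joint entropy is just the sum of the two single-atom entropies, which is the extensive case.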

What if we have more particles? Well, we can quantify those correlations too. Say we have three variables, and the third one is (with very little surprise) described by variable $A_3$. It is then a simple exercise to write the joint entropy $H(A_1A_2A_3)$ as

$$H(A_1)+H(A_2)+H(A_3)-H(A_1:A_2)-H(A_1:A_3)-H(A_2:A_3)+H(A_1:A_2:A_3)$$

Entropy Venn diagram for three random variables, with the correlation entropies indicated.
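The three-variable expansion can be checked the same way. The sketch below uses a toy example of my own choosing, not one from the argument above: $A_1$ and $A_2$ are independent fair bits and $A_3$ is their XOR. The triple term is computed as $H(A_1:A_2:A_3)=H(A_1:A_2)-H(A_1:A_2|A_3)$, which is the standard definition I am assuming here. Note that in this example all pairwise shared entropies vanish while the triple term is negative.

```python
import math
from collections import defaultdict

def entropy(dist):
    """Shannon entropy (in bits) of a dict {outcome: probability}."""
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)

def H(joint, *indices):
    """Entropy of the marginal distribution over the given coordinates."""
    out = defaultdict(float)
    for outcome, p in joint.items():
        out[tuple(outcome[i] for i in indices)] += p
    return entropy(out)

def pair_info(joint, i, j):
    """Shared entropy H(Ai:Aj) = H(Ai) + H(Aj) - H(AiAj)."""
    return H(joint, i) + H(joint, j) - H(joint, i, j)

# Toy joint distribution (an assumption for illustration): A3 = A1 XOR A2.
joint = {(a, b, a ^ b): 0.25 for a in (0, 1) for b in (0, 1)}

singles = sum(H(joint, i) for i in range(3))
pairs = sum(pair_info(joint, i, j) for i, j in [(0, 1), (0, 2), (1, 2)])

# Conditional shared entropy H(A1:A2|A3) = H(A1A3) + H(A2A3) - H(A1A2A3) - H(A3)
info_12_given_3 = H(joint, 0, 2) + H(joint, 1, 2) - H(joint, 0, 1, 2) - H(joint, 2)
triple = pair_info(joint, 0, 1) - info_12_given_3

joint_entropy = H(joint, 0, 1, 2)
reconstructed = singles - pairs + triple

print(f"sum of single entropies : {singles:.3f} bits")
print(f"sum of pairwise terms   : {pairs:.3f} bits")
print(f"triple term             : {triple:.3f} bits")
print(f"reconstructed H(A1A2A3) : {reconstructed:.3f} bits")
print(f"direct H(A1A2A3)        : {joint_entropy:.3f} bits")
```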

We find thus that the entropy of the joint system of variables can be written in terms of the extensive entropy (the sum of the subsystem entropies) minus the correlation entropy $H_{\rm corr}$, which includes correlations between pairs of variables, triplets of variables, and so forth. Indeed, the joint entropy of an $n$-particle system can be written in terms of a sum that features the (extensive) sum of single-particle entropies plus (or minus) the possible many-particle correlation entropies (the sign always alternates between even and odd number of participating particles):

$$H(A_1\cdots A_n)=\sum_{i=1}^n H(A_i)-\sum_{i<j}H(A_i:A_j)+\sum_{i<j<k}H(A_i:A_j:A_k)-\cdots$$

This formula quickly becomes cumbersome, which is why Shannon entropy isn't a very useful formulation of non-equilibrium thermodynamics unless the correlations are somehow confined to just a few variables.

Now, let's look at what happens when the gas in the bottle escapes into the larger area. Initially, the entropy is small, because the correlation entropy is large. Let's write this entropy as

$$H(A_1,\ldots,A_n|I)=H(A_1,\ldots,A_n)-I,$$

where $I$ is the information I have because I know that the molecules are in the bottle. You now see why the entropy is small: you know a lot (in fact, $I$) about the system. The unconditional piece is the entropy of the system when all the fast things (the molecules escaping the bottle) have happened.

Some of you may have already understood what happens when the bottle is opened: the information $I$ that I have (or that any other observer has, for that matter) decreases. And as a consequence, the conditional entropy $H(A_1,\ldots,A_n|I)$ increases. It does so until $I=0$, and the maximum entropy state is achieved. Thus, what is usually written as the second law is really just the increase of the conditional entropy as information becomes outdated. Information, after all, is that which allows me to make predictions with accuracy better than chance. If the symbols that I have in my hand (and that I use to make the predictions) do not predict anymore, then they are not information anymore: they have turned to entropy. Indeed, in the end this is all the second law is about: how information turns into entropy.
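Here is a toy illustration of information becoming outdated. It is only a sketch under strong assumptions of my own: a single molecule, a ring of 50 coarse-grained sites standing in for the available space, and a lazy random walk standing in for the real dynamics. All I know initially is that the molecule is somewhere in the first 5 sites (the "bottle"). The entropy of my prediction for its position, given that knowledge, then grows toward its maximum, while the information (maximum entropy minus conditional entropy) decays toward zero.

```python
import math

def entropy(p):
    """Shannon entropy (in bits) of a list of probabilities."""
    return -sum(q * math.log2(q) for q in p if q > 0)

def step(p):
    """One step of a lazy random walk on a ring: stay with probability 1/2,
    hop to either neighbor with probability 1/4 (periodic boundaries)."""
    n = len(p)
    return [0.5 * p[x] + 0.25 * p[x - 1] + 0.25 * p[(x + 1) % n]
            for x in range(n)]

N_SITES = 50   # hypothetical size of the whole available space
BOTTLE = 5     # hypothetical size of the bottle
N_STEPS = 5000

# What the observer knows at t = 0: the molecule is somewhere in the bottle.
p = [1.0 / BOTTLE if x < BOTTLE else 0.0 for x in range(N_SITES)]
h_max = math.log2(N_SITES)   # entropy once the knowledge is worthless

conditional_entropy = []     # entropy of the position given the initial knowledge
information = []             # what the initial knowledge is still worth
for t in range(N_STEPS + 1):
    h = entropy(p)
    conditional_entropy.append(h)
    information.append(h_max - h)
    p = step(p)

for t in (0, 10, 100, 1000, N_STEPS):
    print(f"t = {t:5d}   H(A|I) = {conditional_entropy[t]:.4f}   "
          f"I = {information[t]:.4f}")
```

The sum of the two columns stays fixed at the maximum entropy throughout: only the split between "entropy given what I know" and "what I know" changes over time.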

You have probably already noticed that I could now take the vessel on the right of the figure above and open that one up. Then you realize that you did have information after all, namely you knew that the particles were confined to the larger area. This example teaches us that, as I pointed out in "What is Information? (Part I)", the entropy of a system is not a well-defined quantity unless we specify what measurement device we are going to use to measure it, and as a consequence what the range of values of the measurements is going to be.

The original second law, being faulty, should therefore be reformulated like this:

In a thermodynamical equilibrium or non-equilibrium process, the unconditional (joint) entropy of a closed system remains a constant.

The "true second law", I propose, should read:

When an isolated system approaches equilibrium from a non-equilibrium state, its conditional entropy almost always increases

Well, that looks suspiciously like the old law, except with the word "conditional" in front of "entropy". It seems like an innocuous change, but it took two blog posts to get there, and I hope I have convinced you that this change is not at all trivial.

Now to close this part, let's return to Gibbs's entropy, which really looks exactly like Shannon's. And indeed, the $p_i$ in Gibbs's formula

$$S=-\sum_i p_i\log p_i$$

could just as well refer to non-equilibrium distributions. If it does refer to equilibrium, we should use the Boltzmann distribution (I set here Boltzmann's constant to $k=1$, as it really just renormalizes the entropy)

$$p_i=\frac{1}{Z}e^{-E_i/T},$$

where $Z=\sum_i e^{-E_i/T}$ is known as the "partition function" in thermodynamics (which just makes sure that the $p_i$ are correctly normalized), and $E_i$ is the energy of the $i$th microstate. Oh yeah, $T$ is the temperature, in case you were wondering.

If we plug this $p_i$ into Gibbs's (or Shannon's) formula, we get

$$S=\log Z+\frac{E}{T},$$

where $E=\sum_i p_iE_i$ is the average energy. This is, of course, a well-known thermodynamical relationship because $F=-T\log Z$ is also known as the Helmholtz free energy, so that $F=E-TS$.
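The relationship $S=\log Z+E/T$ (with $E$ the average energy) and the resulting $F=E-TS$ are easy to verify numerically. The energy levels and temperature below are arbitrary values picked for illustration, with $k=1$ and natural logarithms as in the text.

```python
import math

# Arbitrary illustrative values (assumptions, not taken from any real system).
energies = [0.0, 1.0, 2.0, 5.0]   # E_i for each microstate
T = 1.5                           # temperature, with k = 1

# Partition function and Boltzmann distribution.
Z = sum(math.exp(-e / T) for e in energies)
p = [math.exp(-e / T) / Z for e in energies]

# Gibbs/Shannon entropy (natural log) and average energy.
S = -sum(q * math.log(q) for q in p)
E = sum(q * e for q, e in zip(p, energies))

# Helmholtz free energy from the partition function.
F = -T * math.log(Z)

print(f"S            = {S:.6f}")
print(f"log Z + E/T  = {math.log(Z) + E / T:.6f}")
print(f"F            = {F:.6f}")
print(f"E - T S      = {E - T * S:.6f}")
```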

As we have just seen that this classical formula is the limiting case of using the Boltzmann (equilibrium) distribution within Gibbs's (or Shannon's) formula, we can be pretty confident that the relationship between information theory and thermodynamics I just described is sound.

As a last thought: how did von Neumann know that Shannon's formula was the (non-equilibrium) entropy of thermodynamics? He had been working on quantum statistical mechanics in 1927, and deduced that the quantum entropy should be written in terms of the quantum density matrix $\rho$ as (here "Tr" stands for the matrix trace)

$$S(\rho)=-{\rm Tr}\,\rho\log\rho.$$

Quantum mechanical density matrices are in general non-diagonal. But were they to become classical, they would approach a diagonal matrix where all the elements on the diagonal are probabilities $p_1,\ldots,p_n$. In that case, we just find

$$S(\rho)=-\sum_{i=1}^n p_i\log p_i,$$

that is, Shannon's formula is just the classical limit of the quantum entropy that was invented twenty-one years before Shannon thought of it, and you can bet that Johnny immediately saw this!
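The classical limit can be seen in the smallest possible case. The sketch below assumes a real symmetric $2\times 2$ density matrix with diagonal entries $a$ and $1-a$ and off-diagonal entry $c$ (values chosen only for illustration), for which the eigenvalues have a closed form, so no linear algebra library is needed. The quantum entropy $-{\rm Tr}\,\rho\log\rho$ is the Shannon entropy of the eigenvalues, and it approaches the Shannon entropy of the diagonal as $c$ shrinks to zero.

```python
import math

def shannon(probs):
    """Shannon entropy (natural log) of a list of probabilities."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def von_neumann_2x2(a, c):
    """Entropy -Tr(rho log rho) for rho = [[a, c], [c, 1 - a]].

    For a valid density matrix we need c**2 <= a * (1 - a).
    The eigenvalues are (1 +/- sqrt((2a - 1)**2 + 4 c**2)) / 2.
    """
    root = math.sqrt((2 * a - 1) ** 2 + 4 * c * c)
    eigenvalues = [(1 + root) / 2, (1 - root) / 2]
    return shannon(eigenvalues)

a = 0.7                                   # illustrative diagonal probability
classical = shannon([a, 1 - a])           # Shannon entropy of the diagonal
off_diagonals = [0.4, 0.2, 0.05, 0.0]     # shrinking off-diagonal element
quantum = [von_neumann_2x2(a, c) for c in off_diagonals]

for c, s in zip(off_diagonals, quantum):
    print(f"c = {c:4.2f}   S(rho) = {s:.6f}")
print(f"Shannon entropy of the diagonal = {classical:.6f}")
```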

In other words, there is a very good reason why Boltzmann's, Gibbs's, and Shannon's formulas are all called entropy, and Johnny von Neumann didn't make this suggestion to Shannon in jest.

Is this the end of the "Whose entropy is it anyway?" series? Perhaps, but I have a lot more to write about the quantum notion of entropy, and whether considering quantum mechanical measurements can say anything about the arrow of time (as Landau and Lifshitz suggested). Because considering the quantum entropy of the universe can also say something about the evolution of our universe and the nature of the "Big Bang", perhaps a Part 3 will be appropriate.

Stay tuned!