Easy Search Less Easy Search

But problems of size $N$ (problems that take $N$ steps to solve) are still in the class of easy problems. A little harder are problems that take polynomial time to solve (like $N^2$ steps, or even $N^{10,000}$).

Problems of this type are said to belong to the class "P", where P stands for polynomial. They are (for the most part) easy for a computer. Things get problematic when the $N$ is not in the base, but in the exponent. Like, $2^N$ or $e^N$. The exponential function rises sooo much faster than any polynomial, that the difference between the two (polynomial versus exponential) is not one of degrees. It is one of substance.

Now, the Second thing: Suppose somebody hands you a sheet of paper and claims: "THIS is the solution to this extremely hard problem you have been talking about".

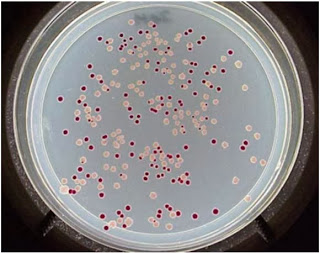

How long will it take you to confirm (or ridicule) said claim? You would think: "Pretty fast I gather", and you would be right (most of the time). In the example of the search above, if I tell you that the red dot is THAT ONE in the upper left corner, all you would have to say is: "OK, right, I see it". For the second panel, it's in the lower right corner (the blue dot). See, it's so much easier if I show you the solution!

There is a name for problems that are really hard, but for which any proposed solution can be checked really quickly. This class of problems is called "NP": the class of problems for which we can verify a solution in polynomial time, but may not be able to

find

it in polynomial time.

Strictly speaking, NP stands for "non-deterministic polynomial". It means that a computer very unlike the one you read this on (a non-deterministic one) could solve the problem in polynomial time. An unpredictable computer, so to speak. Maybe a quantum one. Maybe that has to remain the subject of a different post. One that speculates about whether it is obvious that there must be a classical algorithm that factorizes primes in polynomial time.

Let's just think of the problems in the class NP as those that we have no idea how to solve in polynomial time. I'm oversimplifying here, but this is a blog, not a textbook.

So, I can imagine that you would immediately agree that checking a solution is a hell of a lot faster than finding a solution. Because that makes sense.

We indeed believe that's true. But we do not know.

Let me give you an example hard problem to illustrate all this. It's kind of the grand-daddy of hard problems, perhaps in part because it is the one that Stephen Cook used to formulate the problem. In fact, he showed that if you could solve

that

problem, then you could solve many many others right away, because this grand-daddy is part of a class of problems that can all be related to each other (called the NP-complete problems). This is cool because it means that if you've solved

any

of the problems in the set in polynomial time, then you've solved them all in polynomial time.

This grand-daddy of problems is the 3-SAT problem, and I urge you to be awake through my description of it. (All other descriptions would put you to sleep much faster anyway.) If you can get through this, I promise that there are symphonies ahead.

"Do I really have to endure a 30 seconds lecture about 3-SAT?"

You brush your teeth, don't you? That's 60 seconds right there! (If not, you should reconsider your brushing routine. Just sayin'.)

So here it goes. 30 seconds.

You know about logic already. If A and B are two variables that can be either TRUE or FALSE, then you know whether A$\vee B$ is TRUE or FALSE, depending on the truth value of A and B. Now let's get C into it. I can now ask, for example, whether A$\vee$B$\vee\neg$ C is true. This $\neg$ symbol, by the way, is just the logical NOT. So, if A is TRUE, then $\neg$A is FALSE. And $\vee$ stands for OR, of course, while $\wedge$ simply means AND.

Well, you say, obviously, this clause A$\vee$B$\vee\neg$ C is TRUE if any of A or B is true, or C is false. Indeed, to check a single clause, that's easy. But what if we take two clauses (each made from three variables (three, get it, 3-SAT?) and ask whether the AND of these two clauses is true. Like, for example, (A$\vee$B$\vee\neg$ C)$\wedge (\neg$ B$\vee$C$\vee\neg$ D).

"Well OK", you say, now I have to determine whether each of the clauses is TRUE to figure out whether the AND of the two clauses is true. And I see what you did there: in any of the clauses, variables can reoccur. "And you're down 15 seconds, by the way. "

All right. Now imagine I have a given number of variables A,B,C, to whatever. Like N of them. And I make tons of clauses from them (each of them ORs of three variables), ANDed together. And I call the resulting expression a "statement". And I ask you the all-important question:

"Could the resulting statement ever be TRUE?"

It could look like this:

(R$\vee$T$\vee\neg$ C)$\wedge (\neg$ B$\vee$C$\vee\neg$ D)$\wedge(\neg$ W$\vee$B$\vee\neg$ M)$\wedge$(F$\vee\neg$ G$\vee$ D)$\wedge$(W$\vee$T$\vee\neg $R)

Or it could be a lot longer. Asking whether the statement can ever be TRUE is asking whether the statement can be "satisfied". And because the statement is composed of clauses with three variables, it is the "3-Satisfiability" problem: 3-SAT.

Now, I don't expect you to be able to answer this question just by looking at the statement. And, I also realize that my 30 seconds are up. But you could of course just test it. If there are $N$ variables, you just have to test $2^N$ possible cominations of TRUE and FALSE for these variables, plug them into the statement, and check if it ever returns 1. But you understand immediately that this will take a long time if $N$ is large. An exponential amount of time, because $2^N$ depends on $N$ exponentially.

(Really it does. Because $2^N=e^{\ln(2) N}$. If you are more comfortable with exponentials to the base e.)

Now, let me imagine for a moment that you have been thinking about this for longer than the 30 seconds it took you to read through this simple example. Please, let me indulge in that thought just for the sake of it.

You may have come up with this simple thought:

"What if..... every variable appears only once in any clause? Shouldn't it be obvious that some combination will result in a TRUE statement?"

Yes. Yes indeed, that is quite right. I'm impressed.

"But wait, wait. What if there are only, like, two variables A and B, but a gazillion clauses. Isn't it obvious that there are bound to be contradictions between clauses so that not all clauses could possibly be right at the same time (kind of like A and $\neg A$ cannot be both be TRUE at the same time! Am I right? Am I?"

Gosh, you. You're like, totally right. If that happens (a small number of variables, a large number of clauses) you can fairly quickly rule out that the statement can ever be TRUE. Indeed, figuring out whether a statement of many clauses but a small number of variables, or a large number of variables and just a few clauses, is usually quick and easy.

For anything inbetween, however, we think it'll take checking essentially all $2^N$ to figure out satisfiability. Sorry. Unless, of course, there is a trick nobody has yet thought of. An algorithm of unheard of power.

Remember when I wrote that I want you to think about two things? Two very different things?

The first was: How long does it take to solve a really hard problem? In the case of 3-SAT, we think it'll take an "NP" amount of time, on average. NP: a very very long time. Possibly as long as the universe has been around (for big $N$.). But we can check any proposed solution quickly.

Just imagine: someone gives you a sequence of variables A,B,C, etc. and claims that

"A=TRUE, B=FALSE, C=FALSE.... will satisfy the statement!"

You can check that in no time at all, by just plugging it into the formula. Voilà! Either that someone was right, or not. The time to check any proposed solution is polynomial in the problem size, that is, it is P.

Is P (time to check a solution) equal to NP (time to find a solution)? That's preposterous right? "No way!" you exclaim. Yet, nobody has ever been able to prove that this is not so. People have tried, and

even claimed they succeeded

.

So we have to entertain, at this point in time, the possibility that P=NP, as preposterous as that may sound. Because the people who have been trying to prove the opposite aren't exactly amateurs. There is, after all, a million bucks riding on it. So could it be that P=NP? What would the world be like it if it was true?

First, let us think about this: Is anything like this (P=NP) even possible at all? It would mean that we can create rare things that are "TRUE" (by some measure) as easily as we can check that any thing is TRUE (by some measure). Let's go back to 3-SAT to see what this means. Let's imagine that we deal with a hard case of 3-SAT: that there is actually only a single lonesome set of assignements to the variables that results in the statement to be TRUE. In that case, the statement is satisfiable, but proving it will require finding the proverbial needle in a haystack. We know that we can check every proposition quickly. But if we don't have any way of guesssing what a good solution is, then we have to check all of them. Checking each may be quick. Checking all is not, because there are an exponential number of candidates.

How could we find this one needle in a haystack? Is something like this even thinkable in principle?

It is indeed! Think of a different problem: creating a poignant and meaningful piece of poetry.

"That's ridiculous!", you exclaim, "How can you liken a creative process such as writing poetry to an automated procedure?"

Hold on there for a moment. Take you favorite piece of poetry. Think about it. Recite it in your head.

What, you don't have one? Really, you should spend a bit more time away from the computer, smell the roses, so to speak. What about this one, about the

thing with feathers

? No? What about

Hamlet's soliloquy

? Well, you should have a favorite piece of poetry, is all I'm saying. I have more, but I can tell you're getting tired of this discussion already.

Now, given the medium in which those pieces of poetry that I referred to are rendered (sequences of letters drawn from an alphabet of 26 symbols, plus the space), they are extraordinarily rare. I think I can even trust you to calculate how rare, if you imagine the "blind monkey" way of writing a poetry on a type writer. You know, the kind that (theoretically speaking) can reproduce all the works of Shakespeare when randomly hacking away at a keyboard.

![Incidents of infection with the "Stoned" virus over time, courtesy of [2].](https://images.squarespace-cdn.com/content/v1/53308268e4b00147ec53e8b4/1395928284364-1PXH8XG3GQAZQ2XQQNIH/image-asset.png)