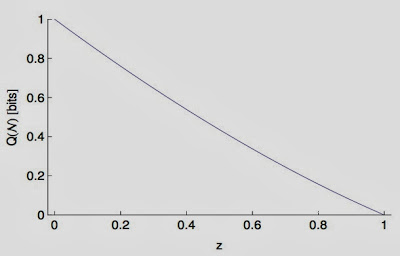

In that figure, I show you the capacity as a function of $z=e^{-\omega/T}$, where $T$ is the temperature of the black hole and $\omega$ is the frequency (or energy) of that mode. For a very large black hole the temperature is very low and, as a consequence, the channel isn't very noisy at all (low $z$). The capacity therefore is nearly perfect (close to 1 bit decoded for every bit sent). When black holes evaporate, they become hotter, and the channel becomes noisier (higher $z$). For infinitely small black holes ($z=1$) the capacity finally vanishes. But so does our understanding of physics, of course, so this is no big deal.
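To get a feel for the numbers, here is a minimal Python sketch that evaluates $z$ for a few black hole masses. It rests on assumptions that are mine, not the paper's: the standard Hawking temperature of a Schwarzschild black hole, $T=\hbar c^3/(8\pi G M k_B)$, SI units restored in the exponent ($z=e^{-\hbar\omega/k_B T}$), and a mode frequency of $10^4$ rad/s picked purely for illustration. The function names are made up for this example.

```python
import math

# Physical constants in SI units.
HBAR = 1.054571817e-34   # reduced Planck constant, J s
C = 2.99792458e8         # speed of light, m/s
G = 6.67430e-11          # Newton's constant, m^3 kg^-1 s^-2
K_B = 1.380649e-23       # Boltzmann constant, J/K
M_SUN = 1.98847e30       # solar mass, kg


def hawking_temperature(mass_kg):
    """Hawking temperature (kelvin) of a Schwarzschild black hole.

    Assumption: T = hbar c^3 / (8 pi G M k_B), the textbook result.
    """
    return HBAR * C**3 / (8 * math.pi * G * mass_kg * K_B)


def noise_parameter(mass_kg, omega):
    """z = exp(-hbar*omega / (k_B*T)) for a mode of angular frequency omega (rad/s)."""
    return math.exp(-HBAR * omega / (K_B * hawking_temperature(mass_kg)))


if __name__ == "__main__":
    omega = 1.0e4  # illustrative mode frequency in rad/s (an arbitrary choice)
    for solar_masses in (10.0, 1.0, 0.1):
        mass = solar_masses * M_SUN
        print(f"M = {solar_masses:5.1f} M_sun   "
              f"T = {hawking_temperature(mass):.3e} K   "
              f"z = {noise_parameter(mass, omega):.3e}")
```

For a fixed mode, the heavier the hole, the colder it is and the smaller $z$ gets. As the mass shrinks, $z$ creeps up towards 1.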

What this plot implies is that you can perfectly reconstruct the quantum state that Alice daringly sent into the white hole as long as the capacity $Q$ is larger than zero. (If the capacity is small, it would just take you longer to do the perfect reconstruction.) I want to make one thing clear here: the white hole is indeed an optimal cloning machine (the fidelity of $1\to 2$ cloning is actually 5/6 for each of the two clones). But to recreate the quantum state perfectly, you have to do some more work, and that work requires both clones. Once you have finished, though, the reconstructed state has fidelity $F=1$.
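If you wonder where the 5/6 comes from: it is the fidelity of the optimal universal cloner for qubits. As a small sketch, assume the known Gisin-Massar formula for turning $N$ identical qubits into $M$ clones, $F=\frac{M(N+1)+N}{M(N+2)}$. The formula is my addition here, not something derived in this post, and the function name is hypothetical.

```python
from fractions import Fraction


def optimal_clone_fidelity(n, m):
    """Single-clone fidelity of the optimal universal N -> M qubit cloner.

    Assumption: the Gisin-Massar formula F = (M(N+1) + N) / (M(N+2)),
    valid for M >= N >= 1.
    """
    if n < 1 or m < n:
        raise ValueError("need M >= N >= 1")
    return Fraction(m * (n + 1) + n, m * (n + 2))


if __name__ == "__main__":
    print(optimal_clone_fidelity(1, 2))       # 5/6: the 1 -> 2 case quoted above
    print(optimal_clone_fidelity(1, 1))       # 1: no cloning attempted, nothing lost
    print(optimal_clone_fidelity(1, 10**9))   # approaches 2/3 for very many clones
```

Exact fractions keep the 5/6 visible instead of burying it in 0.8333. Note that this is the fidelity of each imperfect clone, which is a different statement from the $F=1$ reconstruction that uses both clones together.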

"Big deal" you might say, "after all the white hole is a reflector!"

Actually, it is a somewhat big deal, because I can tell you that if it weren't for that blue stimulated bit of radiation in the figure above, you couldn't do the reconstruction at all!

"But hold on hold on", I hear someone mutter, from far away. "There is an anti-clone behind the horizon! What do you make of that? Can you, like, reconstruct another perfect copy behind the horizon? And then have TWO?"

So, now we come to the second result of the paper. You actually cannot. The capacity of the channel into the black hole (what is known as the complementary channel) is actually zero because (and this is technical speak) the channel into the black hole is entanglement breaking. You can't reconstruct perfectly from a single clone or anti-clone, it turns out. So, the no-cloning theorem is saved.

Now let's come to the arguably more interesting bit: a perfectly absorbing black hole ($\alpha=1$). By inspecting the figure, you see that now I have a clone and an anti-clone behind the horizon, and a single clone outside (if I send in one particle). Nothing changes in the blue and red lines. But everything changes for the quantum channel. Now I can perfectly reconstruct the quantum state behind the horizon (as calculating the quantum capacity will reveal), but the capacity in front vanishes! Zero bits, nada, zilch. If $\alpha=1$, the channel from Alice to Bob is entanglement breaking.

It is as if somebody had switched the two sides of the black hole!

Inside becomes outside, and outside becomes inside!

Now let's calm down and ponder what this means. First: Bob is out of luck. Try as he might, he cannot have what Alice had: the same entanglement with $R$ that she enjoyed. Quantum entanglement is lost when the black hole is perfectly absorbing. We have to face this truth. I'll try to convince you later that this isn't really terrible. In fact it is all for the good. But right now you may not feel so good about it.

But there is some really good news. To really appreciate this good news, I have to introduce you to a celebrated law of gravity, the equivalence principle.

The principle, due to the fellow whose pic I have a little higher up in this post, is actually fairly profound. The general idea is that an observer should not be able to figure out whether she is, say, on Earth being glued to the surface by 1g, or whether she is really in a spaceship that accelerates at the rate of 1g (g being the gravitational acceleration at the surface of the Earth, you know: 9.81 m/s$^2$). The equivalence principle has far-reaching consequences. It also implies that an observer (called, say, Alice) who falls towards (and ultimately into) a black hole should not be able to figure out when and where she passed the point of no return.

The horizon, in other words, should not appear as a special place to Alice at all. But if something dramatic were to happen to quantum states that cross this boundary, Alice would have a sure-fire way to notice this change: she could just keep the quantum state at her disposal in a protected manner, and constantly probe this state to find out if anything happened to it. That's actually possible using so-called "non-demolition" experiments. So, unless you feel like violating another one of Einstein's edicts (and, frankly, the odds are against you if you do), you better hope nothing happens to a quantum state that crosses from the outside to the inside of a black hole in the perfect absorption case ($\alpha=1$).

Fortunately, we proved (result No. 3) that you can perfectly reconstruct the state behind the horizon when $\alpha=1$: the capacity of the channel into the black hole is non-zero. As a consequence, the equivalence principle is upheld.

This may not appear to you as much of a big deal when you read this, but many, many researchers have been worried sick that the dynamics they expect in black holes would spell curtains for the equivalence principle. I'll get back to this point, I promise. But before I do so, I should address a more pressing question.

"If Alice's quantum information can be perfectly reconstructed behind the horizon, what happens to it in the long run?"

This is a very serious question. Surely we would like Bob to be able to "read" Alice's quantum message (meaning he yearns to be entangled just like she was). But this message is now hidden behind the black hole event horizon. Bob is a patient man, but he'd like to know: "Will I ever receive this quantum info?"

The truth is, today we don't know how to answer this question. We understand that Alice's quantum state is safe and sound behind the horizon--for now. There is also no reason to think that the ongoing process of Hawking radiation (that leads to the evaporation of the black hole) should affect the absorbed quantum state. But at some point or other, the quantum black hole will become microscopic, so that our cherished laws of physics may lose their validity. At that point, all bets are off. We simply do not understand today what happens to quantum information hidden behind the horizon of a black hole, because we do not know how to calculate all the way to very small black holes.

Having said this, it is not inconceivable that at the end of a black hole's long, long life, the only thing that happens is the disappearance of the horizon. If this happens, two clones are immediately available to an observer (the one that used to be on the outside, and the one that used to be inside), and Alice's quantum state could finally be resurrected by Bob, a person who would no doubt deserve to be called the most patient quantum physicist of all time.

Now what does this all mean for black hole physics?

I have previously shown that classical information is just fine, and that the universe remains predictable for all times. This is because to reconstruct classical information, a single stimulated clone is enough. It does not matter what $\alpha$ is; it could even be one. Quantum information can be conveyed accurately if the black hole is actually a white hole, but if it is utterly black then quantum information is stuck behind the horizon, even though we have a piece of it (a single clone) outside of the horizon. But that's not enough, and that's a good thing too, because we need the quantum state to be fully reconstructable inside of the black hole, otherwise the equivalence principle is hosed. And if it is reconstructable inside, then you better hope it is not reconstructable outside, because otherwise the no-cloning theorem would be toast.

So everything turns out to be peachy, as long as nothing drastic happens to the quantum state inside the black hole. We have no evidence of something so drastic, but at this point we simply do not know.

Now what are the implications for black hole complementarity? The black hole complementarity principle was created from the notion (perhaps a little bit vague) that, somehow, quantum information is both reflected and absorbed by the black hole channel at the same time. Now, given that you have read this far in this seemingly interminable post, you know that this is not allowed. It really isn't. What Susskind, Thorlacius, and 't Hooft argued for, however, is that it is OK as long as you won't be caught. Because, they argued, nobody will be able to measure the quantum state on both sides of the horizon at the same time anyway!

Now I don't know about you, but I was raised believing that just because you can't be caught it doesn't make it alright to break the rules. And what our more careful analysis of quantum information interacting with a black hole has shown, is that you do not break the quantum cloning laws at all. Both the equivalence principle and the no-cloning theorem are perfectly fine. Nature just likes these laws, and black holes are no outlaws.