In 1863, the British writer Samuel Butler wrote a letter to the newspaper The Press entitled "Darwin Among the Machines". In this letter, Butler argued that machines had some sort of "mechanical life", and that they would some day evolve to be more complex than people and ultimately drive humanity to extinction:

"Day by day, however, the machines are gaining ground upon us; day by day we are becoming more subservient to them; more men are daily bound down as slaves to tend them, more men are daily devoting the energies of their whole lives to the development of mechanical life. The upshot is simply a question of time, but that the time will come when the machines will hold the real supremacy over the world and its inhabitants is what no person of a truly philosophic mind can for a moment question."

(S. Butler, 1863)

While futurist and doomsday prognosticator Ray Kurzweil would probably agree, I think that the realm of the machines is still far in the future. Here I would like to argue that Darwin isn't among the machines just yet, but he is certainly inside the machines.

The realization that you could observe life inside a computer was fairly big news in the early 1990s. The history of digital life has been chronicled before, but perhaps it is time for an update, because a lot has happened in twenty years. I will try to be brief: A Brief History of Digital Life. But you know how I have a tendency to fail in this department.

Who came up with the idea that life could be abstracted to such a degree that it could be implemented (mind you, this is not the same thing as simulated) inside a computer?

Why, that would be just the same guy who actually invented the computer architecture we all use! You all know who this is, but here's a pic anyway:

I don't have many scientific heroes, but he is perhaps my number one. He was first and foremost a mathematician (who also made fundamental contributions to theoretical physics). After he invented the von Neumann architecture of modern computers, he asked himself: could I create life in it?

Who would ask himself such a question? Well, Johnny did! He asked: if I could program an entity that contained the code that would create a copy of itself, would I have created life? Then he proceeded to try to program just such an entity, in terms of a cellular automaton (CA) that would self-replicate. Maybe you thought that Stephen Wolfram invented CAs? He might want to convince you of that, but the root of CAs goes back to Stanislaw Ulam, and indeed our hero Johnny. (If Johnny isn't your hero yet, I'll try to convince you that he should be. Did you know he invented Game Theory?) Johnny actually wrote a book called "Theory of Self-Reproducing Automata" that was only published after his death. He died comparatively young, at age 53. Johnny was deeply involved in the Los Alamos effort to build an atomic bomb, and was present at the 1946 Bikini nuclear tests. He may have paid the ultimate price for his service, dying of cancer likely due to radiation exposure. Incidentally, Richard Feynman also succumbed to cancer from radiation, but he enjoyed a much longer life. We can only imagine what von Neumann could have given us had he had the privilege of living into his 90s, as for example Hans Bethe did. And just like that, I listed two other scientists on my hero list! They both are in my top 5.

All right, let's get back to terra firma. Johnny invented Cellular Automata just so that he could study self-replicating machines. What he did was create the universal constructor, albeit completely in theory. But he designed it in all detail: a 29-state cellular automaton that would (when executed) literally construct itself. It was a brave (and intricately detailed) construction, but he never got to implement it on an actual computer. This was done almost fifty years later by Nobili and Pesavento, who used a 32-state CA. They were able to show that von Neumann's construction ultimately was able to self-reproduce, and even led to the inheritance of mutations.

Von Neumann used information coded in binary in a "tape" of cells to encode the actions of the automaton, which is quite remarkable given that the structure of DNA had yet to be discovered.

Perhaps because of von Neumann's untimely death, or perhaps because computers would soon be used for more "serious" applications than making self-replicating patterns, this work was not continued. It was only in the late 1980s that Artificial Life (in this case, creating a form of life inside of a computer) became fashionable again, when Chris Langton started the Artificial Life conferences in Santa Fe, New Mexico. While Langton's work also focused on implementations using CA, Steen Rasmussen at Los Alamos National Laboratory tried another approach: take the idea of computer viruses as a form of life seriously, and create life by giving self-replicating computer programs the ability to mutate. To do this, he created self-replicating computer programs out of a computer language that was known to support self-replication: "Redcode", the language used in the computer game Core War. In this game, which was popular in the late 80s, the object is to force the opposing player's programs to terminate. One way to do this is to write a program that self-replicates.

Rasmussen created a simulated computer within a standard desktop, provided the self-replicator with a mutation instruction, and let it loose. What he saw was first of all quick proliferation of the self-replicator, followed by mutated programs that not only replicated inaccurately, but also wrote over the code of un-mutated copies. Soon enough no self-replicator with perfect fidelity would survive, and the entire population died out, inevitably. The experiment was in itself a failure, but it ultimately led to the emergence of digital life as we know it, because when Rasmussen demonstrated his "VENUS" simulator at the Santa Fe Institute, a young tropical ecologist was watching over his shoulder: Tom Ray of the University of Oklahoma. Tom quickly understood what he had to do in order to make the programs survive. First, he needed to give each program a write-protected space. Then, in order to make the programs evolvable, he needed to modify the programming language so that instructions did not refer directly to addresses in memory, simply because such a language turns out to be very fragile under mutations.

Ray went ahead and wrote his own simulator to implement these ideas, and called it tierra. Within the simulated world that Ray had created inside the computer, real information self-replicated, and mutated. I am writing "real" because clearly, the self-replicating programs are not simulated. They exist in the same manner as any computer program exists within a computer's memory: as instructions encoded in bits that themselves have a physical basis: different charge states of a capacitor.

The evolutionary dynamics that Ray observed were quite intricate. First, the 80-instruction-long self-replicator that Ray had painstakingly written himself started to evolve towards smaller sizes, shrinking, so to speak. And while Ray suspected that no program could self-replicate that was smaller than, say, 40 instructions long, he witnessed the sudden emergence of an organism that was only 20 lines long. These programs turned out to be parasites that "stole" the replication program of a "host" (while the programs were write-protected, Ray did not think he should insist on execution protection). Because the parasites did not need the "replication gene" they could be much smaller, and because the time it takes to make a copy is linear in the length of the program, these parasites replicated like crazy, and would threaten to drive the host programs to extinction.

But of course that wouldn't work, because the parasites relied on those hosts! Even better, before the parasites could wipe out the hosts, a new host emerged that could not be exploited by the parasite: the evolution of resistance. In fact, a very classic evolutionary arms race ensued, leading ultimately to a mutualistic society.
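The size advantage of the parasites described above can be made concrete with a back-of-the-envelope sketch. It rests on simplifications chosen purely for illustration, not on tierra's actual rules: one CPU cycle per copied instruction, unlimited memory, no deaths, and no dependence of the parasite on its host. The function name `descendants_after` is made up for this example.

```python
def descendants_after(length: int, cycles: int, cycles_per_instruction: int = 1) -> int:
    """Size of the lineage founded by one replicator after `cycles` CPU cycles.

    Assumes copy time is linear in program length and that every
    individual (parent and offspring) keeps replicating, so the
    lineage doubles once per generation time. Hypothetical toy model.
    """
    generation_time = length * cycles_per_instruction
    generations = cycles // generation_time  # completed rounds of copying
    return 2 ** generations


if __name__ == "__main__":
    budget = 240  # CPU cycles available to each lineage (arbitrary choice)
    for name, length in [("ancestor, 80 instructions", 80),
                         ("parasite, 20 instructions", 20)]:
        print(f"{name}: {descendants_after(length, budget)} descendants")
```

In the time the 80-instruction ancestor completes three rounds of copying, a 20-instruction program completes twelve, which is why even a modest reduction in length pays off so quickly under these assumptions.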

While what Ray observed was incontrovertibly evolution, the outcome of most experiments ended up being much the same: shrinking programs, evolution of parasites, an arms race, and ultimately coexistence. When I read that seminal 1992 paper [1] on a plane from Los Angeles to New York shortly after moving to the California Institute of Technology, I immediately started thinking about what one would need to do in order to make the programs do something useful. The tierran critters were getting energy for free, so they simply tried to replicate as fast as possible. But energy isn't free: programs should be doing work to gain that energy. And inside a computer, work is a calculation.

When my postdoctoral advisor Steve Koonin (a nuclear physicist, because the lab I moved to at Caltech was a nuclear theory lab) asked me (with a smirk) if I had liked any of the papers he had given me to read on the plane, I did not point to any of the light-cone QCD papers; I told him I liked that evolutionary one. He then asked: "Do you want to work on it?", and that was that.

I started to rewrite tierra so that programs had to do math in order to get energy. The result was this paper, but I wasn't quite happy with tierra. I wanted it to do much more: I wanted the digital critters to grow in a true 2D space (like, say, on a Petri dish) and I wanted them to evolve a complex metabolism based on computations. But writing computer code in C wasn't my forte: I was an old-school Fortran programmer. So I figured I'd pawn the task off to some summer undergraduate students. Two were visiting Caltech that summer: C. Titus Brown, who was an undergrad in Mathematics at Reed College, and Charles Ofria, a computer science undergrad at Stony Brook University, where I had gotten my Ph.D. a few years earlier. I knew both of them because Titus is the son of theoretical physicist Gerry Brown, in whose lab I obtained my Ph.D., and Charles used to go to high school with Titus.

Above is a photo taken during the summer when the first version of Avida was written, and if you clicked on any of the links above then you know that Titus and Charles have moved on from being undergrads. In fact, as fate would have it, we are all back together here at Michigan State University, as the photo below documents: we were attempting to recreate that old Polaroid!

The version of the Avida program that ultimately led to 20 years of research in digital evolution (and ultimately became one of the cornerstones of the BEACON Center) was the one written by Charles. (Whenever I asked either of the two to just modify Tom Ray's tierra, they invariably proceeded by rewriting everything from scratch. I clearly had a lot to learn about programming.)

So what became of this digital revolution of digital evolution? Besides germinating the BEACON Center for the Study of Evolution in Action, Avida has been used for more and more sophisticated experiments in evolution, and we think that we aren't done by a long shot. Avida is also used to teach evolution, in high-school and college classrooms.

Whenever I give a talk or class on the history of digital life (or even its future), I seem to invariably get one question that wonders whether revealing the power that is immanent in evolving computer viruses is, to some extent, reckless.

You see, while the path to the digital critters that we call "avidians" was never really inspired by real computer viruses, you had to be daft not to notice the parallel.

What if real computer viruses could mutate and adapt to their environment, almost instantly negating any and all design efforts of the anti-malware industry? Was this a real possibility?

Whenever I was asked this question, in a public talk or privately, I would equivocate. I would waffle. I had no idea. After a while I told myself: "Shouldn't we know the answer to this question? Is it possible to create a new class of computer viruses that would make all existing cyber-security efforts obsolete?"

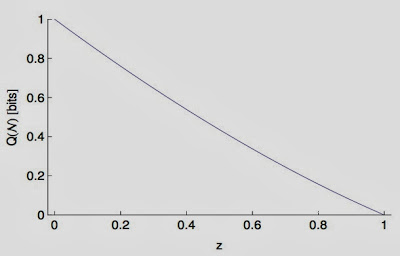

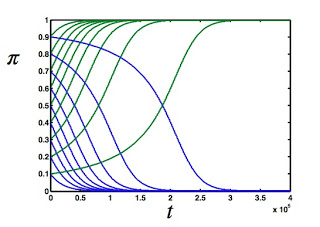

Because if you think about it, computer viruses (the kind that infect your computer once in a while if you're not careful) already display some signs of life. I'll show you here that one of the earliest computer viruses (known as the "Stoned" family) displayed one sign, namely the tell-tale waxing and waning of infection rate as a function of time.

Why does the infection rate rise and fall? Well, because the designers of the operating system (the Stoned virus infected other computers only by direct contact: an infected floppy disk) were furiously working on thwarting this threat. But the virus designers (well, nobody called them that, really--they were called "hackers") were just as furiously working on defeating any and all countermeasures. A real co-evolutionary arms race ensued, and the result was that the different types of Stoned viruses created in response to the selective pressure imparted by operating system designers could be rendered in terms of a phylogeny of viruses that is very reminiscent of the phylogeny of actual biochemical viruses (think influenza, see below).

What if these viruses could mutate autonomously (like real biochemical viruses) rather than wait for the "intelligent design" of hackers? Is this possible?

I did not know the answer to this question, but in 2006 I decided to find out.

And to find out, I had to try as hard as I could to achieve the dreaded outcome. The thinking was: If my lab, trained in all ways to make things evolve, cannot succeed in creating the next-generation malware threat, then perhaps no one can. Yes, I realize that this is nowhere near a proof. But we had to start somewhere. And if we were able to do this, then we would know the vulnerabilities of our current cyber-infrastructure long before the hackers did. We would be playing white hat vs. black hat, for real. But we would do this completely secretly.

In order to do this, I talked to a private foundation, which agreed to provide funds for my lab to investigate the question, provided we kept strict security protocols. No work is to be carried out on computers connected to the Internet. All notebooks are to be kept locked up in a safe. Virus code is only transferred from computer to computer via CD-ROM, also to be stored in a safe. There were several other protocol guidelines, which I will spare you. The day after I received notice that I was awarded the grant, I went and purchased a safe.

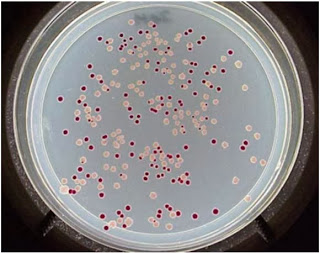

To cut a too long story into a caricature of "short": it turned out to be exceedingly difficult to create evolving computer viruses. I could devote an entire blog post to outlining all the failed approaches that we took (and I suspect that such a post would be useful for some segments of my readership). My graduate student Dimitris Iliopoulos set up a computer (disconnected, of course) with a split brain: one where the virus evolution would take place, and one that monitored the population of viruses that replicated--not in a simulated environment--but rather in the brutal reality of a real computer's operating system.

Dimitris discovered that the viruses did not evolve to be more virulent. They became as tame as possible. Because we had a "watcher" program monitoring the virus population (and culling individuals in order to keep the population size constant), programs evolved to escape the attention of this program, because being noticed by it would ultimately spell death.
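To see why culling by a watcher favors inconspicuous programs, here is a toy model. It is a hypothetical sketch and not the code used in the experiment: each "virus" is reduced to a single number between 0 and 1 standing for how visible it is, every individual leaves one slightly mutated copy per generation, and the watcher is assumed to restore the population size by always removing the most visible individuals. The function name `evolve_visibility` and all parameter values are invented for illustration.

```python
import random


def evolve_visibility(pop_size=100, generations=50, mutation_sd=0.05, seed=0):
    """Return the mean visibility per generation under a culling watcher.

    Toy model: an individual is just a float in [0, 1] describing how
    conspicuous it is to the (hypothetical) watcher program.
    """
    rng = random.Random(seed)
    population = [0.5] * pop_size  # everyone starts equally conspicuous
    means = [sum(population) / pop_size]
    for _ in range(generations):
        # Replication with mutation: each individual leaves one mutated copy,
        # with visibility clamped to the interval [0, 1].
        offspring = [min(1.0, max(0.0, v + rng.gauss(0.0, mutation_sd)))
                     for v in population]
        candidates = population + offspring
        # Assumed watcher rule: cull back to constant size by removing
        # the most visible individuals first.
        candidates.sort()
        population = candidates[:pop_size]
        means.append(sum(population) / pop_size)
    return means


if __name__ == "__main__":
    means = evolve_visibility()
    print(f"mean visibility: start {means[0]:.2f}, end {means[-1]:.2f}")
```

Because the survivors are always the least visible half of parents plus offspring, mean visibility can only fall in this model. A real watcher would be noisier, but the direction of selection is the same.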

This strategy of "hiding" turns out to be fairly well-known amongst biochemical viruses, of course. But our work was not all in vain. We contacted one of the leading experts in computer security at the time, Hungarian-born Péter Ször, who worked at the computer security company Symantec and wrote the book on computer viruses. He literally wrote it: you can buy it on Amazon here.

When we first discussed the idea with Péter, he was skeptical. But he soon warmed up to the idea, and provided us with countless examples of how computer viruses adapt--sometimes by accident, sometimes by design. We ended up writing a paper together on the subject, which was all the rage at the Virus Bulletin conference in Ottawa, in 2008 [3]. You can read our paper by clicking on this link here.

Which brings me, finally, to the primary reason why I am reminiscing about the history of digital life, and my collaboration with Péter Ször in particular. Péter passed away suddenly just a few days ago. He was 43 years old. He worked at Symantec for the majority of his career, but later switched to McAfee Labs as Senior Director of Malware Research. Péter kept your PC (if you choose to use such a machine) relatively free from this artfully engineered brand of viruses for decades. He worried whether evolution could ultimately outsmart his defenses and, at least for this brief moment in time, we thought we could.

[1] T.S. Ray, An approach to the synthesis of life, In: Langton, C., C. Taylor, J. D. Farmer, & S. Rasmussen [eds], Artificial Life II, Santa Fe Institute Studies in the Sciences of Complexity, vol. XI, 371-408. Redwood City, CA: Addison-Wesley.

[2] S. White, J. Kephart, D. Chess. Computer Viruses: A Global Perspective. In: Proceedings of the 5th Virus Bulletin International Conference, Boston, September 20-22, 1995, Virus Bulletin Ltd, Abingdon, England, pp. 165-181. September 1995

[3] D. Iliopoulos, C. Adami, and P. Ször. Darwin Inside the Machines: Malware Evolution and the Consequences for Computer Security (2009). In: Proceedings of VB2008 (Ottawa), H. Martin ed., pp. 187-194

![Incidents of infection with the "Stoned" virus over time, courtesy of [2].](https://images.squarespace-cdn.com/content/v1/53308268e4b00147ec53e8b4/1395928284364-1PXH8XG3GQAZQ2XQQNIH/image-asset.png)